In their recent interview, Musk told Fox News show host Tucker Carlson that AI “has the potential for civilizational destruction.”

The potential may be small, Musk conceded, but it’s not trivial.

Artificial Intelligence is a branch of computer science that seeks to simulate human intelligence in computers. It is fast evolving in its ability to perform tasks that typically require an actual human. Many Americans encounter AI most often when trying to solve problems through Internet searches or customer service. It’s not uncommon in the first layer of customer service, for example, to reach a “chatbot” that will try to steer customers to links to helpful articles that may answer their questions before they speak to a real person.

The issues Musk is addressing are far more complex.

“When Elon is discussing kind of the grave danger to society, he's not discussing necessarily the deep fake stuff that we’re all aware of, although that is very concerning and it can certainly cause damage,” Jake Denton, a research associate for The Heritage Foundation, said on American Family Radio Tuesday. “He's more discussing the information systems, the ability to implement these technologies, and empower them to make decisions without human review.”

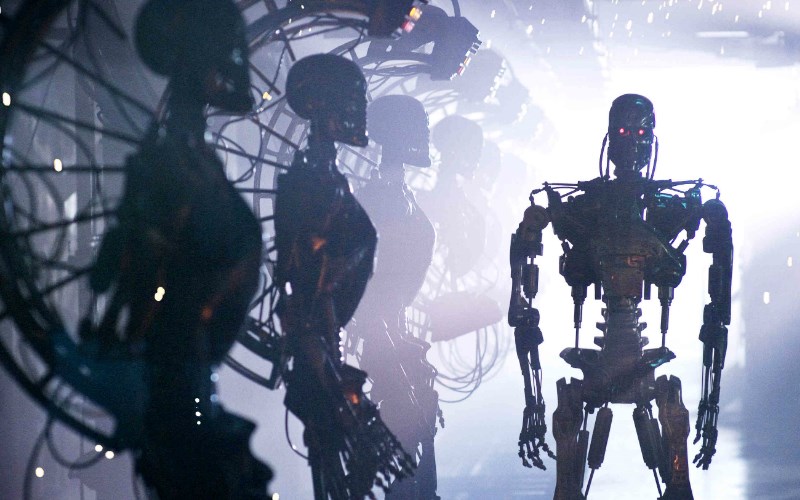

If that sounds like the villainous, world-ending "Skynet" from the Terminator franchise, that's because the concern is not far removed from that fictional scenario.

Musk has become more visible and more widely heard since his purchase of social media giant Twitter, but he’s not a lone voice crying in the wilderness on the issue as countries race to be first to push AI to new heights of development.

In March, Musk and others called for a six-month pause in development.

“Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us,” the group asked in an open letter posted to the website of the Musk-backed Future of Life Institute.

One of the concerns with the rapid progress of AI is the lack of checks and balances. Musk isn’t necessarily calling for an end to AI development but is calling for caution.

“The real concern here is that these systems, these programs have gone out without any government oversight, without any kind of industry standards, no ethical framework,” Denton told show host Jenna Ellis. “They’re just being deployed commercially without any telling where they're headed. That’s kind of the concern that Elon was teeing up there.”

Probably not one big boom

“Civilizational destruction” isn’t likely to happen with one giant boom but could be more of a slow build to a point that causes Americans to look and wonder, “How’d we get here?” Musk points out those chances are small, but there are concerns that are much closer, more real and pressing … concerns like job loss in a number of industries.

“What’s headed down the pipe here soon is going to be the replacement of the customer service industry, the replacement of more white-collar jobs, the research assistant type roles, these jobs where you are sorting through large amounts of information synthesizing and producing an assessment of what you are reading,” Denton said.

That’s not all. Software is being developed that would allow Human Resource departments to sort through a mass of job applicants faster than ever before. Denton said it’s not a reach to see situations in the near future where hiring decisions are made by Artificial Intelligence.

“Say there are thousands of applicants and the HR hiring manager wants to make a quick decision on who to hire. Well, the AI is now being deployed to make quick decisions. In many instances this has already been a thing, but now it's kind of making those decisions from its own perspectives … internal programming,” Denton said.

The rush of AI development is bound to lead to mistakes. There was a big one in February, and it was costly for Google, whose stock plummeted after its new chatbot “Bard” gave the wrong answer to a question regarding space exploration. The correct answer was confirmed by NASA.

Bard also struggled with an economic problem, Denton said.

“They end up creating a fake economics book to cite the problem, their solution. It shows the dilemma here in that on the back end it is making decisions that it believes to be right and then finding the information whether it exists or not to verify it,” Denton said.

Who’s paying attention to AI?

In the social media run-up to Bard’s release, Google described it as a “launchpad for curiosity,” but it was Google execs who were scratching their heads.

“We saw that letter that was put out by Elon Musk a few weeks ago that called for a pause and called for kind of regulatory guardrails to be put in place and for the industry to slow down. That’s really what he was concerned about is that our legislators are notoriously slow,” Denton said.

For many, the AI question is whether the U.S. will step forward with regulation and, if so, what type of damage could occur while regulation plays catch-up.

“The Biden administration is soliciting comment for their future AI regulation, and meanwhile, we're still chugging away, still pushing out more and more updates to these systems, and there's no sign of slowing down,” Denton said. “The Elon interview is just another instance here of drawing attention to the problem, but is Washington really paying attention? I don't know if the answer is yes.”